Governments always face countless challenges - both expected and unexpected.

The expected challenges include climate change, an ageing population, adult social-care funding and managing the fiscal risk to government from contingent liabilities. There are also unexpected challenges such as the rapid development of technology and its integration with our everyday lives, and of course … coronavirus.

What the last 20 years have taught us is that the most pressing crisis a government faces at any moment can change very quickly. We must respond and innovate rapidly by leveraging emerging digital technology.

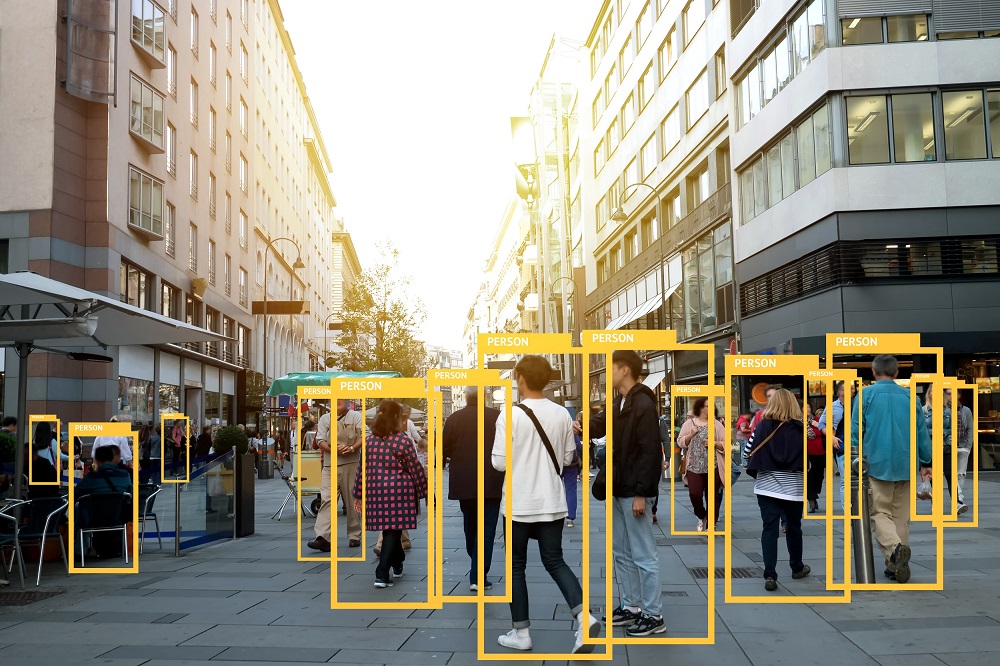

It could be argued that, with developments in digital technology, we’re currently going through a fourth industrial revolution. This is evident in the way artificial intelligence (AI) algorithms are rapidly developing and disrupting our old ways of working and solving problems.

How government looks at this type of opportunity is a work in progress. We do not fully know how the emergence of 5G combined with cloud/edge-based AI will shape this decade. However, the pandemic has given the government impetus to test the 'art of the possible'.

What algorithms can do for government

Governments make countless decisions each day and it makes sense in principle to use an algorithm to help in the decision-making process. The aim is to improve consistency, fairness, speed and efficiency, and to reduce human bias and error.

Some of these decisions will have a direct, noticeable impact on our everyday lives. As an example, algorithms could be used to identify individuals for questioning at the UK border. Others will go unnoticed in the background, such as fraud-detection algorithms.

The key criticisms levelled at algorithms are that they could be:

- biased, potentially with equalities and legal implications

- difficult to interpret and explain to the public

- inappropriate for the given use case

So, it’s vital there is full transparency and accountability when developing such algorithms.

The pandemic has rapidly thrust the use of algorithms in government into the public spotlight. Two cases show how differently the public can respond to them.

Ofqual A-Level algorithm - one that worked but did not command public confidence

After the summer 2020 exams were cancelled, A-level students were expected to be awarded grades based on an assessment of their predicted grades. The awarded grades were to be produced by an algorithm developed by the official exam regulator, Ofqual.

It's well known what happened next: the algorithm was withdrawn for A-levels and was not used to award GCSE grades either.

Earlier this year, the Office for Statistics Regulation (OSR) published its lessons learnt from the approach taken to developing this algorithm. The OSR found that ‘it was always going to be extremely difficult for a model-based approach to grades to command public confidence.’

Public confidence was influenced by several factors such as:

- transparency of the model and its limitations

- use of external technical challenge in key decisions

- level of quality assurance

- stakeholder engagement

- a broader understanding of the exams system

The model did what it was supposed to do, which was to find a fair way to keep grades in line with historic standards. However, Ofqual could not predict how it would also highlight existing inequalities within the grading system, or how the public would react to that.

QCovid® - how high-quality analytics can make a difference to patients’ lives on a national scale

In May 2020, the Chief Medical Officer for England commissioned a predictive model (QCovid®) for a data-driven approach to assessing an individual's COVID-19 risk.

Developed by NERVTAG and Oxford University, QCovid® takes a multi-factor approach to risk. It takes into account both demographic and clinical risk factors, with the aim of applying the model to patient records nationally through a central technological platform. This is used to identify and protect high-risk groups and to prioritise them for vaccination (to reduce poor outcomes, enhance patient safety and reduce the burden on GPs).

Stakeholder engagement was at the centre of development, and included:

- communicating with decision makers, clinical leaders and experts

- working with the Royal College of General Practitioners to develop e-learning resources

- liaising with the Joint Committee on Vaccination and Immunisation on vaccine prioritisation

- setting up feedback processes before launch to enable resolution of common queries

What QCovid® provides is an example of centrally led healthcare analytics and decision-making for the future. This is a future where models are built with the involvement of end-users at their very centre and a commitment to openness and accountability.

Public trust is paramount

In 1958, a New York Times article suggested a machine would soon be able to "perceive, recognize and identify its surroundings without human training or control." This affected the public’s perception of AI and it was relegated to research labs for decades.

This type of hesitation was on show recently when plans to start the collection for the General Practice Data for Planning and Research (GPDPR) were put on hold indefinitely. The GPDPR collection was meant to allow more timely analysis to inform healthcare policy planning. However, more than a million people opted out of being included in the data.

People were concerned about privacy and the risk that the data could be linked to other datasets to reveal personal information. This hesitancy led to the collection being shelved to allow further consultation with the public.

Even though we live in a more technologically connected world, the idea of an algorithm determining the outcome of people's lives can be scary. This shows the need to be open and to communicate the benefits of algorithms for people’s day-to-day lives, while allaying their fears.

Work is ongoing by organisations such as the Centre for Data Ethics and Innovation to help government departments make use of algorithms while maintaining public trust and transparency.

What actuaries can do

As an actuary myself, I instinctively feel this is outside the profession’s traditional areas of managing and advising on financial risks.

However, our skillset allows us to develop and quality assure such algorithms. We can also translate the technical details for wider policy stakeholders and members of the public. Actuaries can communicate what each algorithm can and cannot do, its suitability for a given use case, its potential biases and risks, and the options for mitigation.

Disclaimer

The opinions in this blog post are not intended to provide specific advice. For our full disclaimer, please see the About this blog page.