We’ve just bought some bathroom scales. They not only measure your weight but also allow your mobile phone to track lots of other metrics such as your body fat percentage and protein rate over time.

But are these measurements correct? We bought these new scales because it turns out that our previous ones were under-reporting weight by around 3kg. As you can imagine, that was a disappointing discovery! (Especially as it didn’t coincide with a similar under-reporting in my height!)

I hadn’t even considered that our scales might be incorrect, especially when showing my weight to the nearest 0.1kg on a digital display. Sure, if I’d been using some antique scales requiring calibration and trying to read an analogue dial, I would expect some margin for error. But not with a digital display!

Does a similar problem affect users of analytical models? Particularly in the era of big data and snazzy artificial intelligence models? Do model users remember to ask the basic questions they’ve applied to modelling projects for many years?

Underlying data

You’ve probably heard the term “garbage in, garbage out”: the concept that analysis based on flawed data (the input) will produce unreliable results (the output). The process of verifying data has been central to analytical modelling for decades. Do we apply this sufficiently to big data?

As an example, when we rely on online reviews, how much do we consider the self-selecting nature of respondents? Compare this with the stratified random sampling approach which has been a key part of political opinion polling for many years.
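To make the self-selection point concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the uniform 1 to 5 satisfaction scores, the `post_prob` posting probabilities and the function name `simulate_review_bias` are all hypothetical, and a simple random sample stands in for the stratified approach used in polling.

```python
import random
import statistics

def simulate_review_bias(n_customers=10_000, sample_size=500, seed=0):
    """Compare a self-selected review average with a simple random sample."""
    rng = random.Random(seed)
    # Hypothetical "true" satisfaction scores on a 1-5 scale.
    scores = [rng.choice([1, 2, 3, 4, 5]) for _ in range(n_customers)]
    # Assumption: unhappy customers are the most likely to post a review.
    post_prob = {1: 0.30, 2: 0.05, 3: 0.02, 4: 0.05, 5: 0.10}
    reviews = [s for s in scores if rng.random() < post_prob[s]]
    # A random sample gives every customer the same chance of being asked.
    sample = rng.sample(scores, sample_size)
    return (
        statistics.mean(scores),   # what we would like to know
        statistics.mean(reviews),  # what the online reviews show
        statistics.mean(sample),   # what a random survey shows
    )

true_mean, review_mean, sample_mean = simulate_review_bias()
print(f"true {true_mean:.2f}, reviews {review_mean:.2f}, sample {sample_mean:.2f}")
```

Under these assumptions the review average sits well below the true average, while the much smaller random sample lands close to it. The size of the gap depends entirely on the assumed posting probabilities.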

Location data is particularly susceptible to being taken at face value. A 2016 BBC News article explained how US IP addresses with no known location were being mapped by a database provider to a default address, which happened to be a farm in Kansas. This led to the farm’s owner being blamed incorrectly for a range of scams!

It’s likely that the original data provider would know that any data mapped to that address would be unreliable. However, subsequent users may not have taken the same care to understand and interrogate the data before relying on it.

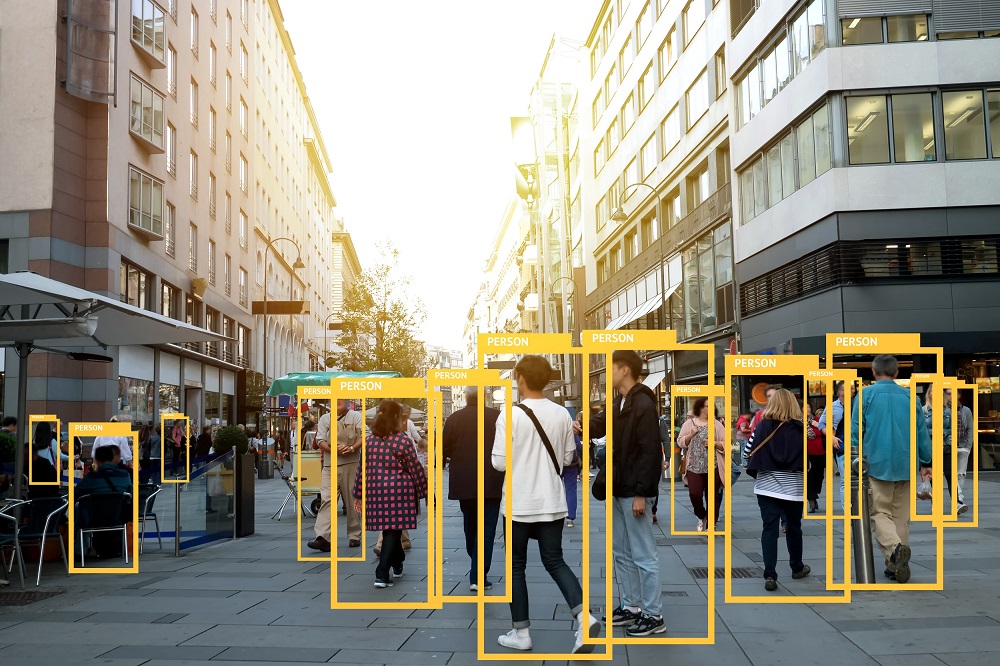

As a further example, the Alan Turing Institute has explained how web-scraping can ultimately lead to facial recognition applications failing to operate properly across diverse populations.

Facial recognition models can be trained using pictures of people collected automatically at scale from public websites. This approach can skew the training images away from populations that are under-represented on those websites, so the technology works less well for them. This is because the training set depends on which photos were easily picked up, rather than on a systematic check that it was sufficiently comprehensive or diverse.

Who thought to ask the basic question of whether the data being used was appropriate for the intended use of the model?

What about the modelling?

Let’s think about trying to forecast the level of the stock market in a year’s time. If I were to show you a single point estimate, I’d expect a healthy discussion about uncertainty: what if future experience differs from the outcome I presented?

What if I instead use a more sophisticated approach, modelling the outcome stochastically, presenting colourful graphs showing the distribution of outcomes and providing 95% and 99% confidence intervals?

In my experience, such analysis helps to convey that there is uncertainty inherent in future outcomes, but sometimes at the expense of any discussion of model and parameter error. In other words, the discussion can focus on the uncertainty shown by the model at the expense of the uncertainty which is not shown (the chance that the model is wrong).
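A small Python sketch can show how much the "uncertainty shown" depends on a choice that is not shown. It assumes, purely for illustration, a lognormal model for the index one year ahead; the function names and the `mu` and `sigma` values are hypothetical and not calibrated to any market.

```python
import math
import random

def simulate_index(mu, sigma, start=100.0, n_sims=20_000, seed=1):
    """Simulate one-year-ahead index levels under an assumed lognormal model."""
    rng = random.Random(seed)
    drift = mu - 0.5 * sigma ** 2
    return sorted(start * math.exp(rng.gauss(drift, sigma)) for _ in range(n_sims))

def central_interval(sorted_values, level=0.95):
    """Empirical central interval read off the sorted simulated outcomes."""
    n = len(sorted_values)
    lower = sorted_values[int((1 - level) / 2 * n)]
    upper = sorted_values[int((1 + level) / 2 * n) - 1]
    return lower, upper

# Same model form, two different (hypothetical) volatility assumptions.
base = central_interval(simulate_index(mu=0.05, sigma=0.15))
alt = central_interval(simulate_index(mu=0.05, sigma=0.25))
print(f"sigma=0.15: {base[0]:.0f} to {base[1]:.0f}")
print(f"sigma=0.25: {alt[0]:.0f} to {alt[1]:.0f}")
```

Each run produces a tidy 95% interval, yet the two intervals differ noticeably. Neither run on its own tells the reader how sensitive the answer is to the volatility assumption, let alone to the choice of a lognormal model in the first place.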

With Machine Learning or Artificial Intelligence models, many of us will lack the confidence to discuss model choice in detail. At the same time, the models might be described in a way which makes them sound unassailable. The opacity of some models can make modelling discussions more difficult, while their reach makes these discussions especially important.

What can I do about this as a model user or owner?

A great starting point is to ask, as a minimum, the same questions that you would ask of a simpler model. For example:

- Is the data appropriate and complete? Has it been verified?

- Why is this model appropriate? How has it been tested against the real-world system?

- How have the parameters been set? What impact would different parameters have?

- How do the results differ if a different model is used?

Modellers should be able to explain, at least to some extent, even the most complex models. Complex models can be validated by techniques such as spot tests, reasonableness checks, regular review and stress-testing modelled outcomes for extreme input values.
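As a rough illustration of stress-testing with extreme inputs, the Python sketch below treats the model as a black box and flags outputs that fail a reasonableness check. The helper `stress_test` and the toy model `toy_body_fat_model` are hypothetical stand-ins invented for this example, not a real scales algorithm.

```python
import math

def stress_test(model, extreme_inputs, plausible_min, plausible_max):
    """Run a model on extreme inputs and collect results that look unreasonable."""
    findings = []
    for x in extreme_inputs:
        try:
            y = model(x)
        except Exception as exc:
            # A crash on an extreme input is itself worth reporting.
            findings.append((x, repr(exc)))
            continue
        if not math.isfinite(y) or not (plausible_min <= y <= plausible_max):
            findings.append((x, y))
    return findings

# Hypothetical stand-in for an opaque model: body fat % from weight in kg.
def toy_body_fat_model(weight_kg):
    return 0.4 * weight_kg - 8.0

# A percentage outside 0 to 100 cannot be right, whatever the model says.
findings = stress_test(toy_body_fat_model, [0.0, 40.0, 80.0, 300.0], 0.0, 100.0)
for weight, result in findings:
    print(f"input {weight} kg gave an implausible result: {result}")
```

The toy model looks sensible for everyday weights but breaks down at the extremes, which is exactly the kind of behaviour a model user can probe without needing to understand the model's internals.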

Machine Learning and Artificial Intelligence models should be interrogated in greater detail than standard models given their data needs and potential reach. For example:

- Has the data been lawfully processed and is it representative?

- How will we know if the model becomes inappropriate over time?

- Will the public understand and accept the model?

Some organisations have developed frameworks to help with such considerations. One example comes from the Institute and Faculty of Actuaries, which published A Guide for Ethical Data Science in collaboration with the Royal Statistical Society.

The Government Actuary’s Department is well placed to help public sector bodies with these issues from its position at the intersection of data science skills and domain-specific experience.

In the meantime, let’s hope my new scales aren’t still under-reporting!

Disclaimer

The opinions in this blog post are not intended to provide specific advice. For our full disclaimer, please see the About this blog page.